The Ethics of AI: Part Three

Is it ethical (or possible) to constrain intelligent life?

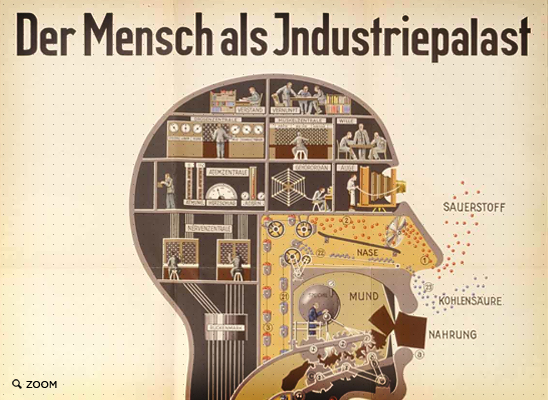

This part of the argument turns on what we think it means to be human, and on whether building those criteria into an AI, and adjusting them, affects what the AI is capable of.

A critical part of being human is having freedom: the freedom to choose one of many possible actions (some we would deem moral and others immoral). It is these choices that, combined with environmental factors, produce an individual. The existence of individuals is of critical importance because it is this variety of conditions and decisions that creates all of us: the paradigm-shifting geniuses, the mass murderers, and the utterly mundane.

One problem that arises immediately when discussing moral AI is whether that freedom, and individuality, remains. Perhaps latent in the term “robot” itself is the notion that constraining a personally intelligent machine such that it is incapable of acting immorally would restrict its freedom. If a machine operated in such a way, it could not comprehend the gravity of making an immoral decision, and it would be difficult to differentiate that machine from other instances that (necessarily) operate in the same way.

However, this is not how I would expect to develop moral artificial intelligence. Rather than creating preprogrammed “moral drones” that are unconsciously restricted from acting immorally, I would create an AI that was aware of the full range of possible decisions, but always (or at least often) acted morally. The distinction here is that our machine would want to act morally. By removing whatever evolutionary propensities for immoral behavior we carry, we could expect our machines to “think clearly” and not only to recognize the proper choice, but to seek it willingly (as the consequences, however delayed, would be determined to be desirable). The moral machines needn’t be perfect either (that may well be impossible), but even an incremental improvement would be worthwhile.

I believe this type of moral AI would preserve individuality, because it produces moral behavior not by forcing a particular decision, but by ensuring that moral behavior is always desired. It is not difficult to imagine a nearby possible universe whose inhabitants (through whatever tweaks of nature/nurture) evolved in such a way as to emphasize equality and unity. Their slightly altered nervous systems would imperfectly prefer moral behavior, with relevant changes to their emergent social structure (one perhaps untainted by discriminatory or violent tendencies). Easier still, imagine the person you wish you could be. For example, my good doppelgänger is not as easily influenced by social pressures, and he does not spend his money frivolously when it would be better donated. While this makes him a different individual, he is still an individual.

Freedom is a necessary part of being human, as it allows for individual decisions towards good or evil. But what about evil itself: is it too a necessary human component? Can we know what it means to be good (and to make the necessary individuating choices) if there is no contrasting evil?

If we abolish even the conscious propensity for evil, we don’t necessarily lose the ability to differentiate good. For example, I don’t have to kill someone to learn that it’s wrong. If evil has to exist in some form, then even a memory would suffice as a deterrent. Being human and being Homo sapiens are not the same thing; evil is only a necessary component of the latter.

It is obvious that free will alone does not constitute individuality; something valuable happens over the course of a conscious being’s life that transforms a cloned instance into an individual. Preserving this process in our AI would be essential to ensuring the same unique development that leads to both genius and the mundane (having hopefully eliminated the profane). The most reliable method for conserving these features is to do it the Homo sapiens way. Instances should be unique and plastic: that is, every AI should be created randomly according to a general blueprint and should be highly flexible. This allows for individual talents (and weaknesses) along with an ability to learn and develop over time.

How would we accomplish something like algorithmically improving the moral behavior of a machine? This is obviously speculative, but it is possible that amplifying the activity of mirror neurons could lead to more moral behavior. Mirror neurons, as their name suggests, reflect perceived behavior as neural activity in the perceiver; they are thought to be responsible for learning language and, perhaps, empathy (Bråten, 2007). For example, when you wince at the sight of someone in pain, it is believed that your mirror neurons are firing in a similar uncomfortable pattern, possibly creating a need to help assuage their pain (and ultimately your own). A strong enough mirror response would essentially implant the Golden Rule, such that any individual’s suffering would be distributed among the “species” and would produce a widespread effort to reduce it.

In this case, it seems that it is not only possible, but ethically advisable to create moral AI. We sacrifice nothing of what it means to be an individual, and can ensure the moral treatment of individuals in society.

Conclusion

This is where we stand: creating a race of artificially intelligent machines is not ethically permissible since using them as a means to an end (which violates Kant’s categorical imperative) does not afford them the respect they deserve as conscious beings. While it is at least theoretically possible to create AI that behaves more morally than us, the cost of the actual implementation of the project (the species-cide of humanity) is too high to justify. It seems there’s no easy way out.

- Bråten, Stein. (2007). On being moved: from mirror neurons to empathy. Philadelphia: John Benjamins Publishing Company.

Comments

Hi, Thame,

I think the very source for interest in moral AI may be its undoing. The social human exists in a dizzying cloud of conventions and expectations, which aren’t vetted against any universally intrinsic standard of morality. I wager that this is why we’re interested in our arts as we are: we look to Kafka, to The Wire, to Fallout 3, to Dostoevsky, to The Road and No Country for Old Men, even to Grey’s Anatomy and Dungeons & Dragons, as sandbox experiments in morality, in the art and science of making decisions.

Nowhere can I find, or find mention of, a canonical reference for what is and is not moral. Yet, for an algorithm, this is precisely what we’d need: a means of ensuring decidability of the attribution of “moral” or “immoral.” Since even killing humans is not universally immoral (examples abound), how might we ensure decidability of even simpler, less dramatic cases? How portable would this moral AI be? Would a moral AI developed a decade ago, and/or in a completely different culture, cause havoc if transplanted?

The only way I can see around this would be something like quantum decidability, which may exist in “the literature” but which I am postulating in a thoroughly hand-wavy fashion. This model doesn’t actually escape the halting condition above, but might appear to by simultaneously trying different solutions to a given decision problem, creating a “moral superposition,” simultaneous decision phases. Then the local context—here “local” not defined by geography, but circumstance, since the sphere of moral applicability can be as small as a shared elevator and as broad as the planet—decides, making the superposition collapse into a single decision. There’s a joke somewhere in there, about how maybe there is a canonical reference, a universal right, but that it’s too heavily encrypted for us to find it. Note I didn’t say there’s a good joke in there.

It’s a little glib, but I find it ironic that we would make so much use of Turing’s life work in this moral AI, when Turing met his end ultimately in the crucible of conflicted morality of mid-20th-century England.

All that said, it’s a wonderful topic to explore, and I hope I’m gravely wrong. This is one reason a single authoritative God would be so handy.

Daniel Black

Jun 10, 05:05 PM #

Why not send the newly created race to Mars? There they could survive, as they are machines. They could build their own society, and without the “evil” inherited from an evolutionary past, it would be a moral society. This would avoid the genocide of the human race and allow us to continue our rampage on this planet, safe in the knowledge that we have done some good which may just cancel out the destruction we have wreaked upon it. Who knows, maybe the species we create may find flaws in themselves and seek to create a new, better species, and thus begin a cycle which may culminate in the epitome of life, the Gods?

Sam Lennon

Jun 22, 10:59 AM #

Hi Thame!

From your point of view, I agree with your conclusion. However, you are talking about future AI in the same context as most people do: you relate AI solely to machines, i.e. technological constructions, being servants to humanity.

I would like to challenge this view, postulating that we will not be able to “construct” AI the way we program computers or build machines. What humans have always done is replicate organic structures technologically, but we have never come close to exactly copying or surpassing nature in any field. Given our technological progress, we will one day have organic technology, combining the best of both worlds.

So imagine creating AI would be the same as creating a human being (it doesn’t matter whether this takes place in a lab or under old-fashioned conditions), but we can, e.g., program the genes in such a way that this “person” will not know how to act immorally.

As a matter of fact, this would be AI, and we would even in some way “construct” such a form of life. However, I clearly differentiate between AI in the common sense (conscious robots, which I doubt we will ever have) and organic AI (enriching organic life through technology).

If one looked at your series from my point of view, the conclusion would be different, and actually quite similar to Sam Lennon’s: humanity being evolution’s tool for creating a somehow new, more highly developed species through self-improvement changes the parameters of your equation. The inevitable result of the new equation is: yes, the creation of AI is ethically permissible, and the implementation costs are zero, as the species itself continues to exist, although modified.

Great blog by the way!

Best,

Julian

Julian Raphael

Sep 26, 01:35 PM #
